Why Claude 3.7 Could Preserve Programming Language Diversity. Yes, RStudio is a Top 10 IDE

How the Latest AI Models Are Changing the Way We Code (But Not Necessarily What We Code With)

Hello Ducktypers,

What a week for AI coding assistants! Unless you've been hiding under a rock (or buried in legacy code), you probably noticed that Anthropic released Claude 3.7 Sonnet and Claude Code a couple of days ago, triggering the predictable LinkedIn storm of hot takes, skepticism, and "I'm feeling old" comments.

And before we get started, don't forget to join AI Product Engineer. We are the community building the AI products of the future! Together, we are learning how to work with AI agents and how to maximize all the latest models being released on a semi-weekly rhythm.

Being familiar with the latest releases is important because, beneath all the noise, something fascinating is happening: AI is not just changing how we code — it might actually preserve programming language diversity in an unexpected way. Let me explain...

RStudio's Resilience & The Language Loyalty Effect

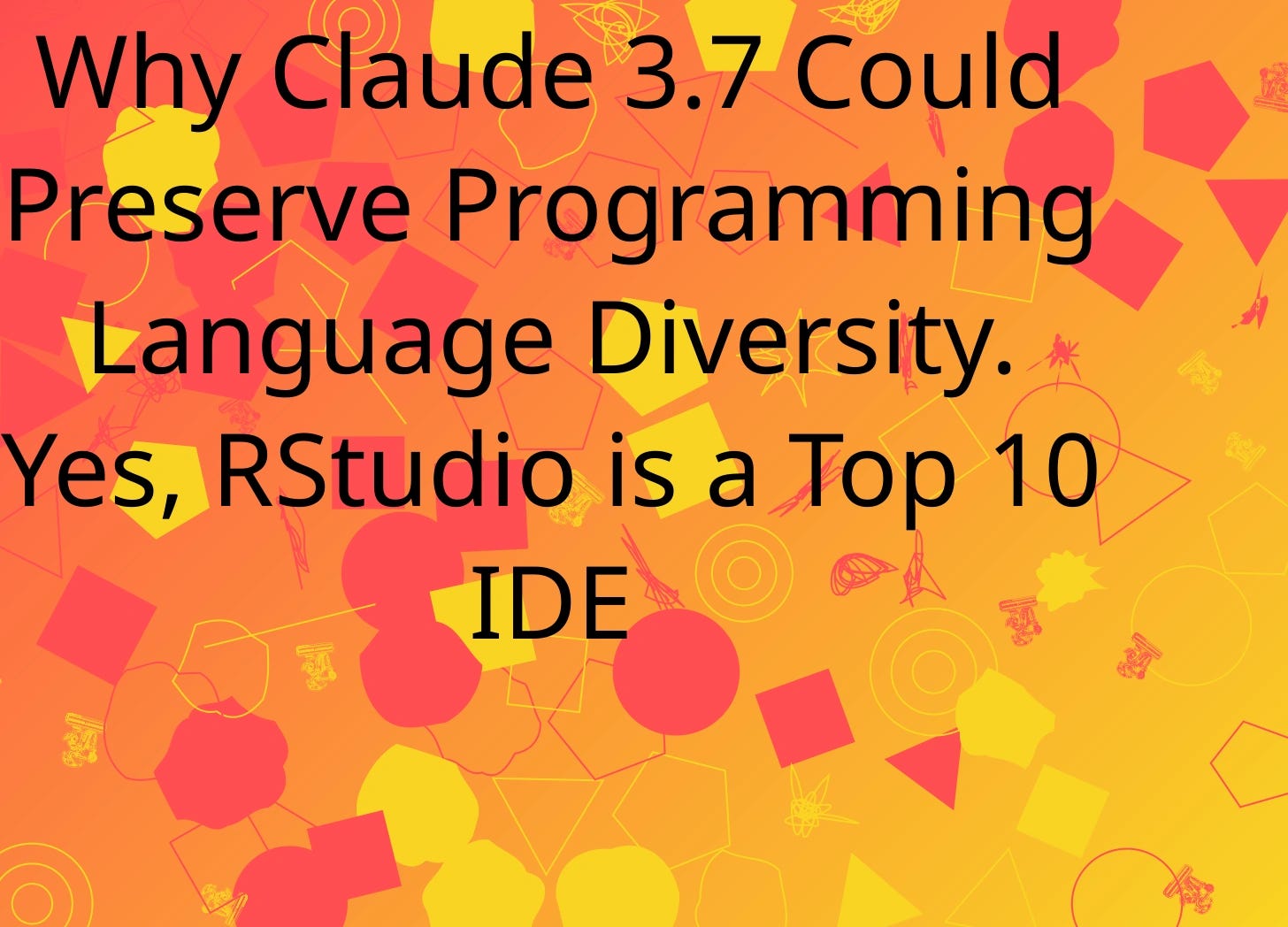

I went looking for the most popular IDEs on the market. Unsurprisingly, Visual Studio and VS Code come out on top, according to a ranking I found online that is based on Google searches. But what caught my attention was that RStudio is among the top 10 IDEs.

For those who are not aware, RStudio is the de facto standard development environment for the R programming language. And if you are doing scientific computing, it is great. To this day, I believe that nothing in the Python world comes close to the user experience that RStudio provides.

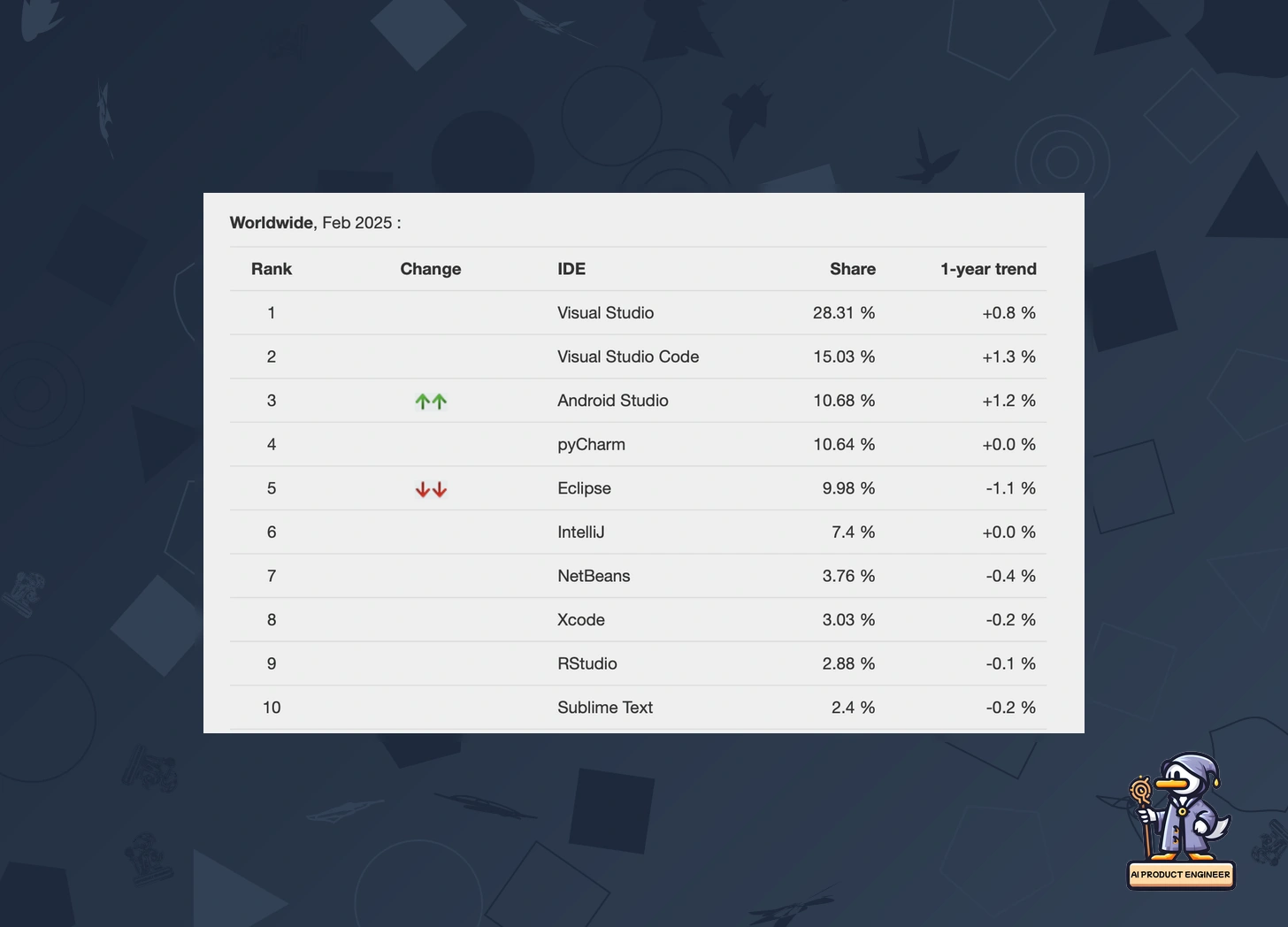

Out of curiosity, I checked its popularity on Google Trends. VS Code and PyCharm keep growing, while RStudio grew until 2022 and seems to have held steady since then.

Overall, this is fantastic news for those who claim that there is no benefit in learning R today or that the only alternative for doing data analysis is working with Python. That is far from correct, as RStudio shows.

The AI Coding Assistant Revolution

So what does RStudio's resilience have to do with this week's Claude 3.7 Sonnet release? Everything.

As Peter Gostev, Head of AI at Moonpig, observed about recent AI coding progress:

"I personally felt a huge shift between coding with Sonnet 3.5/GPT-4o and o1-pro - it felt like going from a fairly capable but flaky partner to someone who can basically do the work for you."

This shift is reaching a tipping point with Claude 3.7, which is showing remarkable coding capabilities. Louis Gleeson shared a striking example: Claude 3.7 created "this Minecraft clone in one prompt, and made it instantly playable in artifacts." While some commenters noted it was "basically a tree zooming app" rather than a full game, the demonstration shows just how accessible coding has become.

The Benchmark Wars Are Getting Tiresome

Of course, with every AI announcement comes the inevitable benchmark competition. As DataXLR8.ai keenly noted:

"The AI benchmark wars are getting embarrassing... What Anthropic announced: Claude 3.7 beats everything! 'Extended thinking' (pausing), Code that actually works, Reasoning that makes sense. What they didn't mention: Pricing (hint: not cheap), How they measured 'beats everything', Independent verification, Real user workflows."

The skepticism about benchmarks is widespread among practitioners. Jean Ibarz put it bluntly: "Its crazy how much these benchmarks are not representative of reality. The best non reasoning model for coding is not gemini-2.0-flash but Claude Sonnet 3.5, and everyone serious about it knows that."

Even Peter Gostev, who uses benchmarks in his analysis, admits their limitations: "I used Deep Research to gather some of the benchmark data... the results were pretty hit & miss... even once it read the page wrong so got the wrong number."

From Benchmarks to Real-World Workflows

What is fascinating in the LinkedIn discourse is how quickly the conversation has evolved from abstract benchmarks to concrete workflows and use cases.

Hugi Ásgeirsson described a sophisticated approach:

"I use prelude to build a context and ask o1-pro to work out a plan... I then copy that plan to a markdown document and add it to the context with Aider, and use o3-mini-high as an engineer model that writes code based on the plan."

Carlos Chinchilla offered another ecosystem insight:

"I have been using Cursor + Claude for the last year everyday... it really outperforms GPT on the code it generates. Curiously, when I then take the same question to ChatGPT or Claude Web, I get better results than sometimes using Cursor + GPT."

These comments reveal something crucial: success with AI coding assistants is not about which model has the highest benchmark score, but about how effectively they are integrated into developers' workflows.

The Language Loyalty Effect

Here is where we connect the dots back to RStudio and programming language choice:

As we do more and more of our development with AI tools, we will likely stick to the programming languages that we already know and like. Often, we switch languages because doing something in one language becomes cumbersome or inefficient, and we're attracted by the ease of use of another one.

Why were people learning Ruby in 2005? Most likely because of Ruby on Rails. If creating code becomes easier and easier as we slowly transition to prompting, vibe coding, and similar approaches, then we will probably stick to our favorite tools, and the AI will help us overcome the limitations of the language itself.

This is where Said Bashin's comment about Claude 3.7 becomes particularly relevant: "Giving users control over response depth is a smart move — sometimes you need speed, sometimes depth." This user control might be the key to making AI assistants adapt to our preferred languages and workflows, rather than forcing us to adapt to them.

Specialization Over Generalization

Jafar Najafov's comment perfectly captures this trend toward specialization:

"Claude for coding. ChatGPT for research. Chapple AI for writing. Grok for latest developments."

The AI landscape is becoming more specialized, with different models finding their niches rather than competing for universal superiority. This specialization could actually help preserve programming language diversity by letting developers use the right AI assistant for their preferred language.

Overcoming Language Limitations

In recent years, one major limitation of a programming language such as R has been its limited support for deep learning and the lack of an equivalent to PyTorch or TensorFlow for developing models at a research level. But what if the AI can work around that limitation for us?

As Harry Munro aptly noted:

"With these latest models we will enable a whole bunch of people who have solid general engineering skills to overcome the 'coding skill' barrier to entry to building decent software."

This democratization works both ways — it not only helps non-coders start coding but also helps experienced developers stick with their preferred languages even when facing domain-specific challenges.

The Real Challenge: That Last 30%

Despite all the enthusiasm, Brad Davis reminds us of the practical challenges:

"I have tried various ChatGPT iterations and Claude Sonnet for programming and left unimpressed... The speed gains I get from writing boilerplate are quickly lost when debugging... It gets it right about 70% of the time but the other 30% of the time it's crazy rabbit holes."

Similarly, Igor Halperin observed that Claude 3.5 "sometimes did very stupid things, including occasionally making its own code progressively worse and worse."

These failure modes highlight an important reality: AI coding assistants still struggle with debugging and complex reasoning, which is precisely where human expertise in a specific language becomes most valuable.

So Where Do We Go From Here?

The future of coding isn't about which language "wins" or which model has the highest benchmark score. It's about building effective human-AI collaborations that augment our capabilities without forcing us to abandon our expertise.

As the FOMO-inducing pace of AI development continues (Maja Voje quipped: "Just last week Grok 3 was the best 🤠 Every day in AI makes me feel like 5 years older"), we might find that the best approach is James Brooks' pragmatic one: "I tend to worry less about the latest updates across each AI and focus on the core tools that deliver on my objectives."

For many of us, that means sticking with the languages and tools we know best while leveraging AI to overcome their limitations. And whether you are an R enthusiast, a Python devotee, or a TypeScript junkie, the good news is that Claude 3.7 and similar models are getting better at speaking your language, whatever it might be.

But having a model that speaks your language is not enough if you don't know how to build AI apps with it. So! Have you already signed up at aiproduct.engineer to start learning how to build AI products with agentic workflows? What are you waiting for? Sign up!

In the meantime, let's catch up again next week to discuss what is happening in Gen AI.

Until next time,

Rod

P.S. Spotted an interesting AI discussion on LinkedIn? Tag AIProduct.Engineer in the comments and your insights might be featured in our next issue!